ChatGPT and related AI developments have captivated the mainstream media and the public in recent months. In our guest post, we're tackling the whats and whys.

And who better than Recordsure’s Chief Technology Officer, Kit Ruparel, to look under the hood of the hype?

Kit, who leads the innovation and technology teams that have made Recordsure’s unique concepts a reality, offers his compelling expert insight into the utility of the current generation of language AI.

The language-science battleground

“No one would have believed, in the early years of the twenty-first century, that human affairs were being watched by the seemingly limitless compute of AI. No one could have dreamed that our words were being scrutinised as someone with a microscope studies creatures that swarm and multiply in a drop of water. Few people even considered the possibility of AI that learned to communicate.

And yet, across the Gulf of Silicon Valley, wallets immeasurably superior to ours regarded our language with envious eyes, and slowly, and surely, they used our words against us…”

2022 & 2023 will be remembered as the years that computational language science became mainstream – or more precisely, as the years that ChatGPT entered the public consciousness, refocusing the vision for human-machine interaction.

Despite earlier attempts by companies and the media to generate excitement (or fear) about ‘Artificial Intelligence’, this is the development to date that has most resonated with the masses.

In part, this was due to the utility of the product, as something that could answer questions and write copy for you; and whilst AI has seen previous mass utility in many areas, including Internet search, GPS route-finding and online product recommendations, none of these have grabbed the public attention so immediately, nor held it for so long.

To be human

As many other applications over many decades have demonstrated, today’s computers can do what humans only dreamed possible yesterday.

The reason that ChatGPT and its rapidly evolving competitors have continued to both dominate headlines, and to change the narrative at a geopolitical level, is because this branch of AI mimics human behaviour using the most emotive delineator of our species: our language.

Language is implicitly intertwined with human culture and evolution – and crucially, is the single most essential enabler in the progression of humankind. Language is used to store human knowledge, to represent it, and to rapidly communicate the information required for collaborative innovation.

Does AI now ‘understand’ language?

Probably not, at least not in the way that a human understands information conveyed by language.

And for organisations looking to capitalise either by building products – or by using the slew of new AI technologies now available to them – distinctions like these are perhaps the most relevant aspects of such AI to fully appreciate.

Let’s consider the component mechanisms of the ‘GPT’ family of technologies, with the clues being in that abbreviation of ‘Generative Pre-trained Transformer’.

In the beginning...

The first important aspect of that GPT apparatus then is the word ‘Generative’ – i.e., this class of technology is for generating, or creating, novel content such as responses to questions.

The first chatbots described as ‘AI’ (or what AI was considered to be at the time) were developed in the 1960s, with machine learning aspects described even earlier in the 1950s.

However, it wasn’t until the more advanced ‘deep learning’ innovations of 2012 around image, speech and language AI using Convolutional Neural Networks – most notably by Geoffrey Hinton et al – and the invention in 2014 of Generative Adversarial Networks (Ian Goodfellow et al) that the form of the modern ‘generative chat’ AI that we see today started to take shape.

Amongst the corporate behemoths, Google was the company that most immediately capitalised on such advances. They hired these esteemed fellows and many others out of academia – resulting, in 2017, in the most important recent breakthrough that has led to many families of products: Ashish Vaswani et al’s ‘Transformer model’ … as seen in the concluding letter of the GPT abbreviation.

Under the covers, much of AI is focused on understanding the relationships between ‘things in a sequence’. In language science, those ‘things’ are words, and the ‘sequence’ is typically a sentence or a series of sentences.

Transformers are essentially better and faster at learning the complex relationships between a greater number of things, in much longer sequences, than previous Machine Learning techniques. Once AI understands the relationships between things within sequences, it can be used to compare sequences of things with other sequences of things – or to predict what things are missing from a sequence.
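To make ‘learning the relationships between things in a sequence’ a little more concrete, here is a minimal sketch of the scaled dot-product attention idea at the heart of Transformers. It is deliberately stripped down: real Transformers learn separate query, key and value projections and stack many such layers, whereas this toy version compares the raw vectors directly. The two-number word vectors are invented purely for illustration.

```python
import math


def softmax(scores):
    """Turn raw similarity scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Dot product: larger when two vectors point the same way."""
    return sum(x * y for x, y in zip(a, b))


def self_attention(vectors):
    """Scaled dot-product self-attention with no learned weights.

    Each output is a weighted average of every input vector, where the
    weight reflects how strongly two positions in the sequence relate.
    """
    scale = math.sqrt(len(vectors[0]))
    outputs, all_weights = [], []
    for query in vectors:
        weights = softmax([dot(query, key) / scale for key in vectors])
        mixed = [
            sum(w * v[i] for w, v in zip(weights, vectors))
            for i in range(len(query))
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical 2-dimensional vectors for a three-word sequence.
sequence = {"bank": [1.0, 0.0], "river": [0.9, 0.1], "loan": [0.0, 1.0]}
outputs, weights = self_attention(list(sequence.values()))

for word, row in zip(sequence, weights):
    print(word, [round(w, 2) for w in row])
```

In this made-up example, ‘bank’ puts more weight on ‘river’ than on ‘loan’ simply because their vectors are more alike; a trained model would learn such vectors from data rather than being handed them.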

In the case of chatbots, answering your questions is essentially AI predicting what comes next in a sequence. Does this mean such AI now ‘understands’ what you are talking about? Probably not.
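As a toy illustration of ‘predicting what comes next in a sequence’, the sketch below counts which word most often follows each word in a tiny, made-up corpus and uses those counts to continue a phrase. This is a simple bigram counter, not a Transformer and certainly not how ChatGPT is built; it only shows that plausible-looking continuations can come from statistics about word order, with no comprehension involved.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it and how often."""
    follows = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    candidates = follows.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


def generate(follows, start, length=5):
    """Extend `start` by repeatedly predicting the next word."""
    words = [start]
    for _ in range(length):
        nxt = predict_next(follows, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)


# Invented corpus, for illustration only.
corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
model = train_bigrams(corpus)

print(predict_next(model, "the"))
print(generate(model, "dog", length=3))
```

The model ‘knows’ that ‘cat’ tends to follow ‘the’ only because it has seen that pairing most often; ask it about a word it has never seen and it has nothing to say.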

However, such AI now does ‘understand’ – or at least, is able to immediately recall and utilise – the relationships between words in language(s) as well as (or better than) most humans.

As an aside, many of the Google-employed inventors and other scientists working in these areas of Generative AI and Transformers went on to be founding scientists at OpenAI, the creators of ChatGPT – so you can understand both the technology re-use, as well as the naming convention in the GPT initialism!

Google’s loss, Microsoft’s gain – as the primary backers now behind OpenAI.

On the horizon...

Let’s return then to the two important words here, ‘Generative’ and ‘Transformer’, and the product that made these topics dinner-table conversation: ChatGPT.

I am regularly asked the following sequence of questions, to which the answers are generally along the lines that follow:

“Have you considered using ChatGPT within the Recordsure AI?”

Well, no – not least because of the security angle.

You can only use ChatGPT by giving your data to OpenAI for their ever-and-a-day ownership and use. And as with most sensible companies around the world, we’ve strict policies about not handing over either our own trade secrets – or more importantly, the conversations, transcripts and documents that belong to the banks, insurers, wealth managers and governments that are our clients!

“So, have you considered licensing GPT for in-house use in the Recordsure products?”

Again no, in part as OpenAI is very restrictive about who is allowed to run the latest versions of GPT itself (essentially, the answer is “only Microsoft”).

And besides, we – and more importantly, our clients – wouldn’t want to pay for the industrial-sized warehouse full of compute that would be needed to run it.

“So, have you considered using any technology like GPT in the Recordsure AI?”

Well, the answer there is that we’ll take the Tonic but without the Gin, thank you very much!

That is, to date, our clients have not brought us use-cases for Generative AI – i.e. they don’t need us to create content – whether that be chatbot responses (there are plenty of those products on the market), document or meeting summaries, or AI-generated pictures of mermaids.

The key is in the 'T'

Our clients – and so our products – need to understand stuff, and that’s where Transformer AI comes into play.

Thank you, Google, for the Transformer models that you’ve open-sourced for secure use in our own chosen clouds and datacentres: yes, Recordsure AI relies heavily on Transformer networks and all that went before in terms of Convolutional & Recurrent Neural Networks and the like.

And so yes, we’ve taken the part of the ‘GPT’ innovations (conceived by minds immeasurably superior to ours) that is most relevant to our use cases and built upon it to create the product suite that is used by our clients today.

However, with just the ‘T’ element, despite recent naming trends, we thought ‘RecordsureT’ didn’t have much of a ring to it, so we called our evolution ‘ReviewAI’ instead – so we did at least get both the product utility, followed by a buzzy initialism in there somehow!

"So, what’s next for Recordsure?"

Roadmaps are a trade secret – especially how we plan to continue to advance the fields of document and speech AI ‘understanding’. Next question please.

"Ok, then what’s next for the industry as a whole in the GPT-like language area?"

No Recordsure trade secrets there – just plenty of publicly available content to regurgitate in a few points… and you can take any of the following as hints at our own product direction or not, as you please.

ChatGPT kicked off a ‘War of the Words’ – or more explicitly, a war between the corporate behemoths, as well as highly invested start-ups worldwide, about the size of their ‘Large Language Models’ (LLMs) as measured in ‘billions of parameters’.

However, please know that size isn’t everything… anyone with basic Python development skills could create a ‘trillion parameter’ model tomorrow with enough compute – but that doesn’t mean it will do anything useful.
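To see why a headline parameter count is cheap to inflate, here is a back-of-the-envelope sketch. It uses a common approximation for Transformer-style models (roughly 12 × layers × width², plus the word-embedding table), which ignores biases and normalisation layers. The configuration values are hypothetical, picked only so that the total crosses the trillion mark; they do not describe any real model.

```python
def approx_transformer_parameters(num_layers, d_model, vocab_size):
    """Rough parameter count for a Transformer-style language model.

    Per layer: about 4 * d_model^2 for attention (query, key, value and
    output projections) plus about 8 * d_model^2 for a feed-forward block
    four times as wide as the model. Biases and layer norms are ignored.
    """
    attention = 4 * d_model * d_model
    feed_forward = 8 * d_model * d_model
    embeddings = vocab_size * d_model
    return num_layers * (attention + feed_forward) + embeddings


# Hypothetical configuration, chosen only to exceed one trillion.
hypothetical = approx_transformer_parameters(
    num_layers=128, d_model=25_600, vocab_size=50_000
)
print(f"{hypothetical:,} parameters")
```

Changing two numbers in a configuration is all it takes to reach a trillion on paper; training such a model so that it does something useful is the expensive part.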

Importantly, therefore, look for the application utility: how are companies using such LLMs and how will the industry react in order to qualitatively measure their value?

Then, whilst OpenAI – despite its name – remains a closed company with a proprietary product only usable on their Microsoft infrastructure, instead look to their competitors (and allies). And in doing so, I’ll pick out four directions for your own research from amongst many:

Direction #1:

Facebook, by judgement or bad luck, has released (and partly had leaked!) its LLaMA family of technologies – and following the leak, essentially gave up and gave over the suite to the development community. The rate of evolution by hackers around the world using both the LLaMA AI model and its component parts has been astronomical.

Direction #2:

And so, look further into the open-source industry: hackers and scientists everywhere are looking to reverse the direction of the billion-parameters war, shrinking the size of LLMs by dramatic ratios so that you don’t need a private datacentre with a budget equivalent to the GDP of a small nation to train and operate them – yet often claiming minimal compromise on quality.
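The article does not say which shrinking techniques are meant, so as one assumed example, here is a minimal sketch of 8-bit quantisation: storing each weight as a small integer plus one shared scale factor, instead of a 32-bit floating-point number. The weights and the 7-billion-parameter figure are invented for illustration, and real quantisation schemes are considerably more sophisticated.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(quantized, scale):
    """Recover approximate floats from the stored integers."""
    return [q * scale for q in quantized]


def model_size_gb(num_parameters, bytes_per_parameter):
    """Storage needed for the weights alone, in gigabytes (10^9 bytes)."""
    return num_parameters * bytes_per_parameter / 1e9


# Invented weights, for illustration only.
weights = [0.42, -1.27, 0.05, 0.9, -0.33]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(quantized, f"largest rounding error: {max_error:.4f}")

# Hypothetical 7-billion-parameter model at 32-bit versus 8-bit precision.
print(model_size_gb(7_000_000_000, 4), "GB versus", model_size_gb(7_000_000_000, 1), "GB")
```

The weights take a quarter of the space, at the cost of a rounding error of at most half the scale factor per weight; whether that counts as a ‘minimal compromise on quality’ depends on the model and the task.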

Direction #3:

Then, look to those developments that are making use of ‘closed’ LLMs, redefining them as ‘Large Foundational Models’ (LFMs) – either by learning from them to behave as they do (e.g. Microsoft Orca), or by letting you adapt their output for your own utility, based on your own data… but only consider those that let you keep your data privately yours.

Direction #4:

Keep a watchful eye on the organisation where most language AI innovations have either occurred, or have been rapidly poached from academia. Whilst Google was beaten to the punch with their Bard product by ChatGPT, their innovations in the language AI domain, as well as with foundational machine learning technologies such as TensorFlow, have shaped much of the last eight years – and they have a habit of publishing much of what they invent for use by others.

Guarding the future

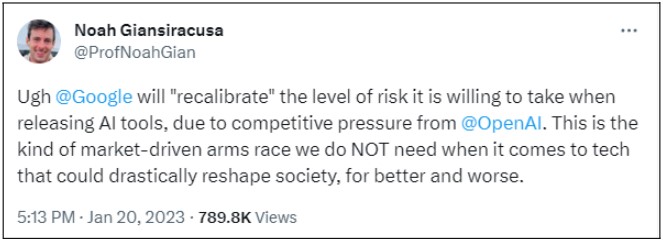

Finally, whilst I haven’t attempted to touch on the societal ethics and corporate responsibilities with regard to AI, if you would like to dip your toe into some objective and authoritative waters, I’d highly recommend starting with the Twitter feed of the esteemed Professor Noah Giansiracusa, whose ‘market-driven arms race’ metaphor in the following post inspired the genre overlay for this article:

…perhaps the same analogy could be applied to the plethora of smaller companies rushing ChatGPT and other buzzy-AI based products out the door – in order to climb the hype curve – without necessarily paying due regard to the ethical issues of privacy, bias or influence.

Language innovation unleashed

Large Language Models are in their industrial infancy and it takes time to fork through the layers of fat hype – and the many, many buzzwords – to get to the meat of where the useful innovations actually reside.

For a number of years now, Recordsure has used the most important technologies that underpin products such as ChatGPT to provide a valuable utility to our clients… and our plans are to continue such innovation through combining our own, in-house, Speech & Language AI expertise with the best of the science developed and published by others.

Images courtesy of Generative AI. No pictures of mermaids were harmed, nor copyright infringed, in the making of this blog post, other than the liberties taken with Jeff Wayne’s excellent adaptation of H.G. Wells’s ‘The War of the Worlds’, and its opening monologue as originally recorded by Richard Burton.